Q&A: UofL AI safety expert says artificial superintelligence could harm humanity

October 2, 2024

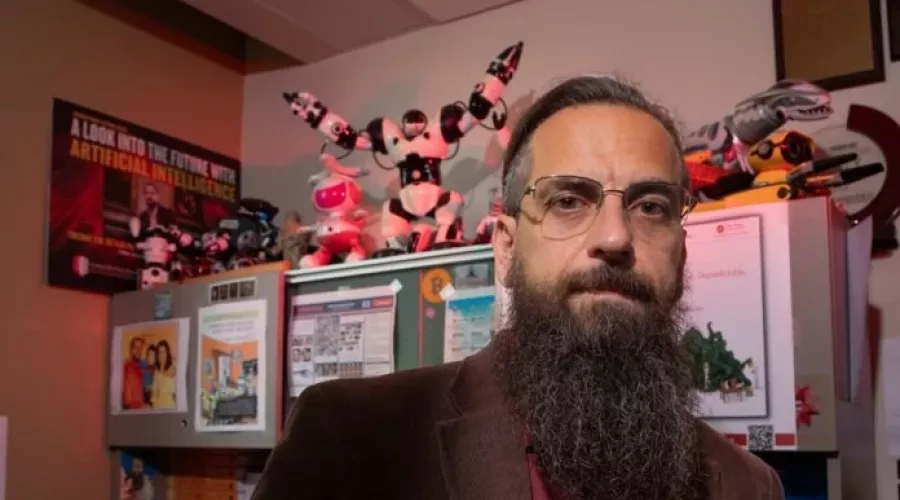

Roman Yampolskiy knows a thing or two about artificial intelligence (AI). A University of Louisville associate professor of computer science, he conducts research into futuristic AI systems, superintelligent systems and general AI. Yampolskiy coined the term “AI safety” in a 2011 publication and was one of the first computer scientists to formally research the field of AI safety, which is focused on preventing harmful actions by AI systems. He is listed among the top 2% of cited researchers in the world.

Technology companies are racing to develop artificial general intelligence (AGI) – systems that can learn, respond and apply knowledge at levels comparable to humans in most domains – or even superintelligence, systems that far exceed human capabilities in a wide range of tasks. They hope these systems will offer immense potential to address human health problems, resolve enduring social issues or relieve human workers of mundane tasks. AI experts surveyed estimated that AGI or superintelligence is likely to become a reality within 2 to 30 years.

Yampolskiy has concerns about this powerful technology, however. His research indicates that these systems cannot be controlled, leaving a high probability that a superintelligent AI system could do immense harm to its human creators, whether of its own volition, through a coding mistake or under malicious direction. It might develop a pathogen that could wipe out the human population or launch a nuclear war, for example. Without a mechanism to control these systems, Yampolskiy believes AI has a high chance of causing very bad outcomes for the human race. For this reason, he strongly advocates that development of the technology should be slowed or suspended until AI safety can be assured and controls established.

Yampolskiy recently published a book, “AI: Unexplainable, Unpredictable, Uncontrollable,” in which he explains why he believes it is unlikely we will be able to control such systems.

UofL News sat down with Yampolskiy to learn more about his concerns and what might prevent an AI catastrophe.

UofL News: What led you to research AI safety?

Roman Yampolskiy: My PhD [2008] was on security for online poker. At the time, bots were a common nuisance for online casinos, so I developed some algorithms to detect bots to prevent them from participating. But then I realized, they are only going to get better. They are going to get much more capable, and this area will be very important once we start seeing real progress in AI.

We were looking at things 12 or 13 years ago that people are just now proposing. It was science fiction at the time. There was no funding, no journals and no conferences on this stuff.

UofL News: What are your concerns with the development of advanced AI?

Yampolskiy: Historically, AI was a tool, like any other technology. Whether it was good or bad was up to the user of that tool. You can use a hammer to build a house or kill someone. The hammer is not in any way making decisions about it.

With advanced AI, we are switching the paradigm from tools to agents. The software becomes capable of making its own decisions, working independently, learning, self-improving, modifying. How do we stay in control? How do we make sure the tool doesn’t become an agent that does something we don’t agree with or don’t support? Maybe something against us. Maybe something we cannot undo because it is so impactful in the world, controlling nuclear plants, space flight or military applications. Once you deploy those systems, there is no undoing that. How will we guarantee that no matter how capable those systems become, how independent, we still have a say in what happens to us and for us?

I don’t think it’s possible to indefinitely control superintelligence. By definition, it’s smarter than you. It learns faster, it acts faster, it will change faster. You will have malevolent actors modifying it. We have no precedent of lower capability agents indefinitely staying in charge of more capable agents.

Until some company or scientist says ‘Here’s the proof! We can definitely have a safety mechanism that can scale to any level of intelligence,’ I don’t think we should be developing those general superintelligences.

We can get most of the benefits we want from narrow AI, systems designed for specific tasks: develop a drug, drive a car. They don’t have to be smarter than the smartest of us combined.

UofL News: What harmful outcomes could result from artificial general superintelligence?

Yampolskiy: There are three different types of risks. One type is existential risk where everyone dies.

Somewhat worse are suffering risks, where everyone wishes they were dead.

Somewhat “nicer” is ikigai risk – where you have no meaning. You have nothing to contribute to superintelligence: you are not a better mathematician, not a better philosopher, not a better poet. Your life is kind of pointless. For many people, their creative output is the meaning they derive in this world. So, we will have a strong paradigm shift in terms of leisure time and society as a whole. That is the best outcome of the three.

UofL News: What is the best-case scenario?

Yampolskiy: I’m wrong! I’m completely wrong. It’s actually possible to control it, we figure it out in time and we have this utopia-like future where the biggest problem is figuring out what to do with all our wealth and health and spare time.

UofL News: Do a lot of other AI experts agree that action is needed?

Yampolskiy: We had open letters signed by thousands of scientists saying we think this is as dangerous as nuclear weapons and we need to have government regulation. And not just quantity but quality – top scientists, Nobel prize winners – all coming on board, agreeing with our message and signing a statement that mitigating AI risks should be a global priority.

UofL News: What should we do to prevent these negative outcomes?

Yampolskiy: There is a lot of research on some aspects of impossibility results – showing that it is impossible to explain, predict and control the systems. There is a lot of research in trying to understand how large neural networks function. I support it fully; there should be more of that.

As individuals, you can vote for politicians who are knowledgeable about such things. We can have more scientists and engineers in office.

If you don’t engage with this technology, you don’t provide free training and labelling data for it. If you don’t pay subscription services, you don’t give them money to buy more compute. They are less likely to be able to raise funding as quickly. You are buying us time. If you insist on pointless government red tape and regulation, it slows them down. It takes money from their computing budget into the legal budget. Now they have to deal with this meaningless government regulation, which is usually undesirable, but here I strongly encourage it.

UofL News: What do you think about instructors and students using ChatGPT, Bing or other generative AI technologies?

Yampolskiy: Previous answer notwithstanding, if you don’t embrace the use of existing generative AI, you are going to be obsolete. You are competing with people who do have knowledge and ability to use those tools and you will not be competitive, so you really have no choice. You can be Amish-like, but that’s not what college is all about.

UofL News: What are you working on now to improve AI safety and make it possible to control these systems?

Yampolskiy: Continuing with impossibility results. We have many tools we would need to try to control the systems, so understanding what tools would be accessible to us is what we hope for. I am trying to understand even in theory to what degree each tool is accessible. We worry about testing, for example. Can you successfully test general intelligence?

UofL News: What else should people know about the issue of uncontrollable artificial intelligence?

Yampolskiy: We haven’t lost until we have lost. We still have a great chance to do it right and we can have a great future. We can use narrow AI tools to cure aging, an important problem, and I think we are close on that front. Free labor, physical and cognitive, will give us a lot of economic wealth to do better in many areas of society that we are struggling with today.

People should try to understand the unpredictable consequences and existential risks of bringing AGI or superintelligent AI into the real world. Eight billion people are part of this experiment they never consented to – not just that they have not consented, they cannot give meaningful consent because nobody understands what they are consenting to. It’s not explainable, it’s not predictable, so by definition, it’s an unethical experiment on all of us.

So, we should put some pressure on people who are irresponsibly moving too quickly on AI capabilities development to slow down, to stop, to look in the other direction, to allow us to only develop AI systems we will not regret creating.

Betty Coffman is a communications coordinator focused on research and innovation at UofL. A UofL alumna and Louisville native, she served as a writer and editor for local and national publications and as an account services coordinator and copywriter for marketing and design firms prior to joining UofL’s Office of Communications and Marketing.
